Are we moving into a post-app world?

I keep asking myself: what will AI UX actually look like when the dust settles?

My vision, and the one we cultivate at Mirai, is that it won’t be more chat, and it won’t be one mega-agent trying to run your entire life.

Andrej Karpathy put it sharply: chatting with LLMs today feels like using an 80s terminal before the GUI was invented. Text is the command line; the “desktop” hasn’t arrived yet. What’s coming will be visual, generative, and adaptive: interfaces created on demand, shaped to your context, and dissolved once they have served their purpose.

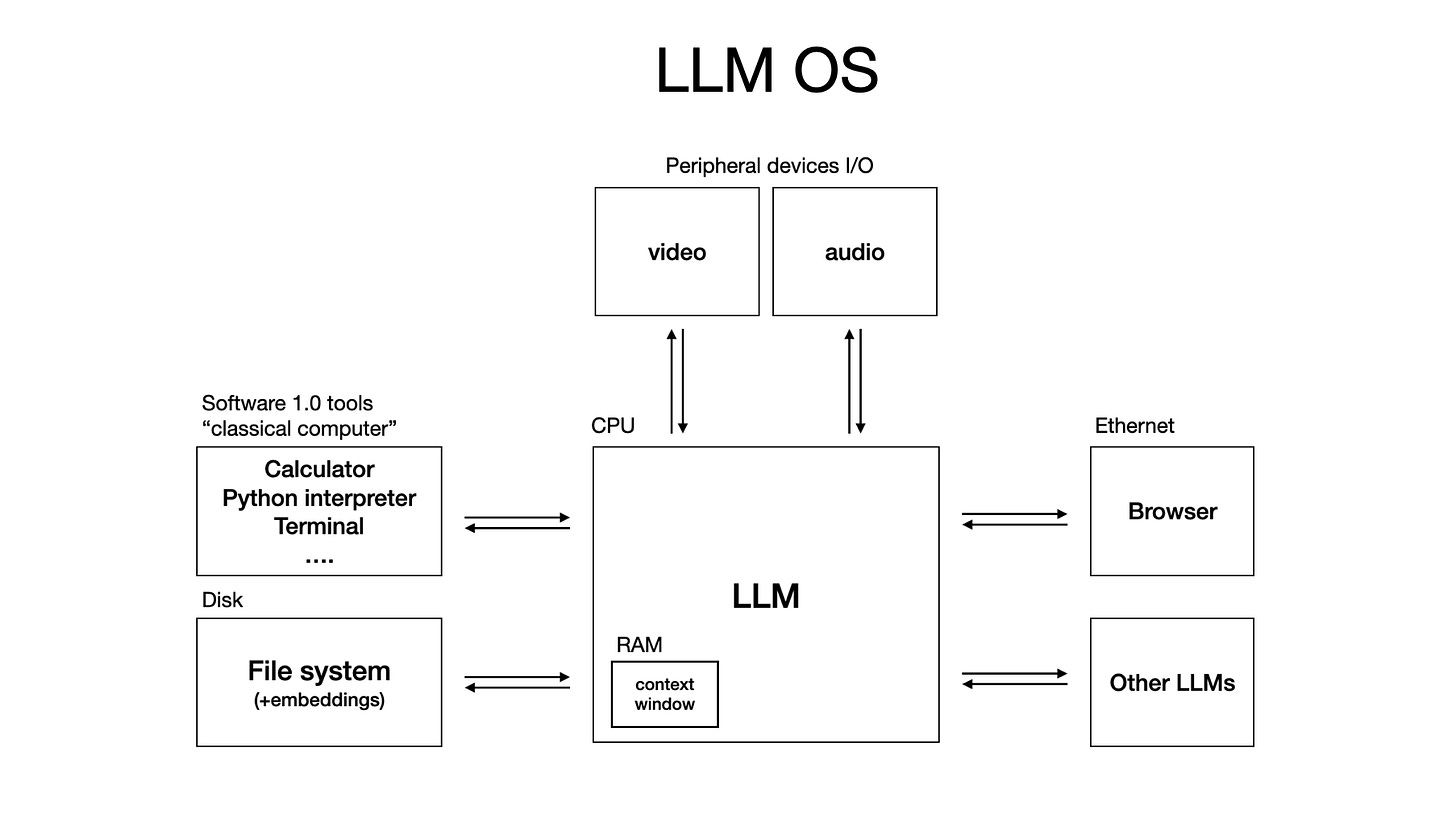

We can call it an AI OS, or a post-app ecosystem.

For this OS to be fast (otherwise it won’t be competitive with what we have now), infrastructure is crucial: device capabilities, model weights, and hybrid pipelines where part of the work never leaves your device while another part lives in the cloud, ready to be summoned at any moment, blazing fast.

Software 3.0 is a post-app world

Right now, we’re stuck in a loop where apps promise help but deliver interruption. You open X to message someone, only to resurface 30 minutes later, having never sent the message, but fully consumed by a debate you didn’t need.

The default move in a meeting is still to flip the phone face-down so it stops hijacking your focus.

Do you control your phone, or does it control you?

That’s the product logic of an app-first world.

Yes, even your favorite AI chatbot or code assistant is still built on patterns from the previous cycle of applications.

But the problem that hasn’t been solved yet is building an experience where you don’t need to switch between apps.

Imagine: you won’t open Airbnb to book a place for your next European summer.

You’ll say: “Nice, Fri–Sun, with a terrace, under €300.” The agent already knows your calendar, your travel habits, your budget, when you arrive, and whether you’re traveling alone or with family. It negotiates with services and composes three options, each with a map, the weather, and hidden fees normalized. One tap and you’re booked.

Or imagine saying: “Play my summer ’25 vibe,” and it blends your history, notes, and social graph into a playlist, without you having to specify the most-played song of that summer, whether you listened to it on Spotify or YouTube, or whether you shared it via HomePod with your family.

We’re not there yet. Even the latest agent demos confuse retrieval with experience: you ask for help shopping, and you get a table of links, half of them hallucinated or broken. You end up doing the work you thought you delegated.

We’ve spent years mapping user journeys — step one, step two, step three.

But the future isn’t about dragging users through steps. It’s about control maps — the moments where they must decide, redirect, or confirm.

This connects to what Karpathy calls Software 3.0. Instead of monolithic apps, you have modular building blocks — models, tools, skills — stitched together in real time. The user doesn’t describe how to reach an outcome; they just declare what they want. The intent layer negotiates the rest.

And the canvas itself will evolve. It won’t be static apps. It will be fluid, ephemeral micro-interfaces: a generative skeleton of charts, sliders, cards, maps, or diagrams, composed just for the task at hand. Sometimes more procedural, like React components. Sometimes more immersive, like a diffusion model dreaming up an entire output.

But we don’t yet have the infrastructure for this shift

This requires a hybrid architecture by design. Latency, privacy, and cost push as much as possible onto the device; complexity and long context fall back to the cloud. It also requires accepting a mix of models instead of a monoculture.
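To make the hybrid split a little more concrete, here is a minimal routing sketch in Python. It is not Mirai’s implementation; the `Request` fields, the `route` function, and the `DEVICE_CONTEXT_LIMIT` threshold are all hypothetical, chosen only to illustrate the policy described above: keep work on the device by default, and fall back to the cloud for long context or complex tasks.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    DEVICE = "device"
    CLOUD = "cloud"


@dataclass
class Request:
    prompt_tokens: int             # size of the context the model must read
    contains_private_data: bool    # e.g. calendar, messages, location
    needs_complex_reasoning: bool  # e.g. multi-step planning across services


# Hypothetical context budget for the local model; real limits depend on
# the device and the model weights it runs.
DEVICE_CONTEXT_LIMIT = 4096


def route(req: Request) -> Target:
    """Keep inference on the device by default; fall back to the cloud
    only when the request is too long or too complex for the local model."""
    # Privacy first in this sketch: private context never leaves the device,
    # even if the local model has to truncate or summarize it.
    if req.contains_private_data:
        return Target.DEVICE
    # Long context or heavy reasoning exceeds what the local model handles.
    if req.prompt_tokens > DEVICE_CONTEXT_LIMIT or req.needs_complex_reasoning:
        return Target.CLOUD
    # Default path: local inference, for latency and cost.
    return Target.DEVICE


if __name__ == "__main__":
    print(route(Request(800, False, False)))     # Target.DEVICE
    print(route(Request(20_000, False, False)))  # Target.CLOUD
    print(route(Request(20_000, True, False)))   # Target.DEVICE
```

Under these assumptions, a short request stays local, a long one goes to the cloud, and the same long request stays on the device if it carries private data. Checking privacy before capacity is a design choice of this sketch; a real system might instead redact or summarize sensitive context before sending anything to the cloud.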

In the real world, most of the cost and friction lies not in training new models but in running them efficiently. Whoever owns how inference happens locally ends up owning how the experience feels.

That’s why “more chat” isn’t enough. Text is fine for specifying intent, but poor for decisions. Decisions need visual state, comparison, one-tap confirmation. They need UIs that appear only when your judgment matters — and disappear once it doesn’t.

If this sounds too futuristic for your taste, think back to the last time you couldn’t tell that a viral jumping-rabbits video was AI-generated.

A few years ago, I was already talking about hyper-personalization of content, where anybody can create anything. Fast forward to today: AI content is already the norm, and vibe-coding has made app creation simpler and more democratic.

Of course, some things don’t change: human choice points remain, taste still matters, and great design still sets the standard. AI removes screens, not judgment. And there is still a lot to be built along the way.

And that’s why at Mirai we focus on the on-device layer.

Latency is UX. Privacy is distribution. Cost is survival.

For AI to become the next interface, it has to live where the user lives: close to intent, close to context, close to control.

— Dima